Exploring Kadalu Storage in k3d Cluster - Operator

2021-May-03 • Tags: kubernetes, kadalu

We are picking up where we left off in the discussion of the CSI Driver component of kadalu storage. Once the CSI pods are deployed, everything else is user facing: adding storage, provisioning PVs and so on.

Today we'll discuss where it all starts, namely the Kadalu Operator. As always, I'll link to resources so that I don't have to reiterate the same concepts.

Introduction §

A kubernetes operator carries the functional knowledge of how to manage a piece of tech (here, bootstrapping glusterfs for use in kubernetes). Custom Resource Definitions (CRDs) are at the heart of an operator: it listens for events on CRD objects and turns knobs in the managed resource accordingly.

The resources below are mandatory reading if you haven't come across kubernetes operators or the kubernetes python api:

Optional:

Since we already covered a lot of ground in the previous post about the Kadalu Project and its prerequisites, we'll dive directly into the kadalu operator component.

Deep dive into Operator §

We'll lean heavily on python's pdb module and follow the operator's actions directly inside the container, so a good understanding of the python debugger will help you follow along. The Python documentation on pdb is easy to follow and self-sufficient.

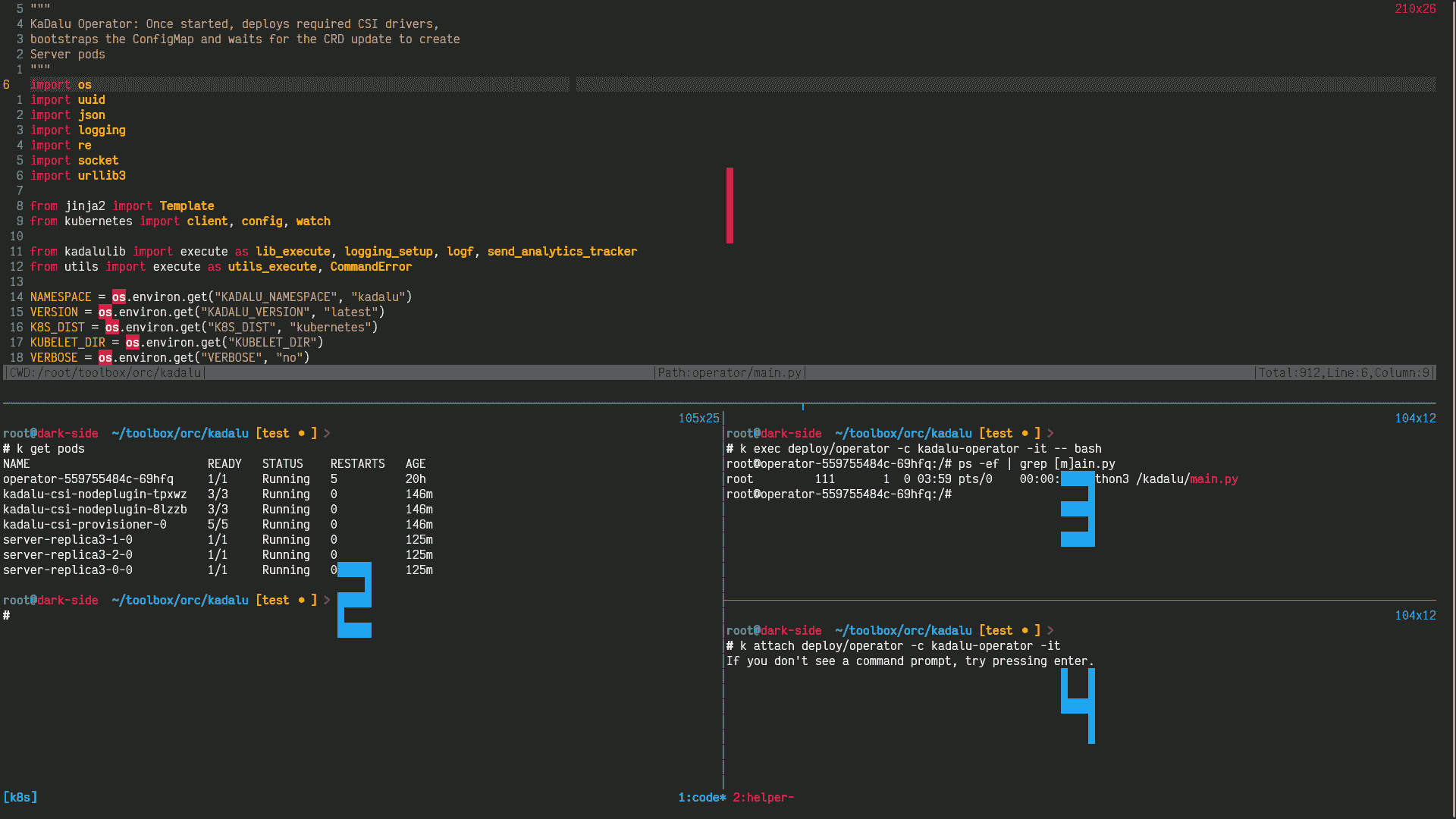

As my workflow is heavily CLI based, built around tmux and neovim, I think the pane layout in the image will be helpful going forward as a reference for which pane I'm performing each task in.

- Pane1: Any change to the source code will be performed here and then copied into the container

- Pane2: Verify the state of the cluster and run any other ad hoc commands

- Pane3: Exec into the kadalu-operator container running on the operator pod

- Pane4: Attach to the command prompt of the kadalu-operator container, making sure never to send a KeyboardInterrupt (^C)

If you are only interested in how to enter pdb in a container, refer to the command below; the rest of the post covers various ways of leveraging this simple concept to illustrate the workflow inside the operator.

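-> python3 -m pdb -c 'import signal' -c 'signal.signal(signal.SIGUSR1, lambda *args: breakpoint())' -c 'c' <script>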

How it works?

- -m pdb: invokes the python interpreter in trace mode of pdb

- -c: provided to pdb as command line arguments

- import signal: imports the signal module from the stdlib into pdb (as the starting word is not a pdb reserved keyword, there's no need to start the statement with !, same for the next set of commands)

- signal.signal(signal.SIGUSR1, lambda *args: breakpoint()): registers a handler for the SIGUSR1 signal (breakpoint() [in version >=3.7] =~ import pdb; pdb.set_trace())

- c: continue, without this the pdb prompt waits for user input
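
The same registration can be written as ordinary python. Below is a minimal sketch, not code from the kadalu repo: the function name install_debug_handler and its hook parameter are my own, and the hook is injectable only so the behaviour can be exercised without opening an interactive prompt.

```python
import os
import signal
import time


def install_debug_handler(hook=breakpoint):
    """Register `hook` to run whenever this process receives SIGUSR1.

    The default hook is the built-in breakpoint(), which opens pdb.
    Returns the previously installed handler so it can be restored later.
    """
    def _handler(signum, frame):
        # `frame` is the frame that was executing when the signal arrived.
        hook()

    return signal.signal(signal.SIGUSR1, _handler)
```

Calling install_debug_handler() early in a script and then running kill -USR1 <pid> from another shell should drop the process into pdb. On a fresh process the returned previous handler is normally signal.SIG_DFL.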

Pros:

- After the signal handler is registered we can invoke the python debugger asynchronously by running kill -USR1 <pid> on the python process

- We'll have the ability to navigate around the last ~10 stack frames and inspect arguments, variables etc.

- In general, getting into the debugger of a running process without changing any source code is an added benefit; having said that, nothing can replace a good logging system 😄

Cons:

- If breakpoints are set after dropping into pdb via the kill signal, neither the history nor the breakpoints will be restored if the process is restarted with the run/restart commands 😞

I would say the above is a major con; however, you can weigh the pros and cons against your use case. While looking for resources around pdb, I found these articles to be good recommendations: a series on pdb and pdbrc.

That is actually the end of the core idea. The rest of the article explains what happens in the kadalu operator in greater detail, so hang on if you want to see how to apply the above concept to a container running a python program. At the time of writing this blog, HEAD of the kadalu repo is at commit f86799f.

Creating a k3d cluster §

I have a newly created k3d cluster and all operations are performed on it. I usually follow this script for creating a cluster; you can modify and use the same. HEAD of the forge repo is at commit 73604c.

Please don't run without checking the script.

Process Monitor (Reconciler) §

Every component of Kadalu, i.e., Operator, Server and CSI, depends on a core monitor process that runs the business logic as a subprocess and tries its best to keep the child alive.

More often than not, when you are debugging any component, make sure this monitor is stable, as it works behind the scenes. The monitor methods are imported into start.py in every component, which triggers a subprocess main.py, among others specific to the component.
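
To make the idea concrete, here is a minimal sketch of such a monitor loop. It is not kadalu's implementation: the function name monitor and the max_restarts and poll_interval parameters are assumptions, and max_restarts exists only so that the sketch terminates, whereas a real monitor would loop forever.

```python
import subprocess
import sys
import time


def monitor(argv, max_restarts=3, poll_interval=0.05):
    """Run `argv` as a child process and restart it whenever it exits.

    Returns the total number of times the child was launched.
    """
    child = subprocess.Popen(argv)
    launches = 1
    while True:
        # poll() returns None while the child is still running.
        if child.poll() is not None:
            if launches > max_restarts:
                return launches
            child = subprocess.Popen(argv)
            launches += 1
        time.sleep(poll_interval)
```

For example, monitor([sys.executable, "-c", "pass"], max_restarts=2) launches a child that exits immediately, restarts it twice and then gives up. This restart behaviour is what later lets a killed child pick up a changed source file.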

Overriding docker entrypoint §

There is often confusion between the docker entrypoint and the kubernetes command directive; please refer to this official doc once and for all to understand the difference if you have struggled with it earlier. I certainly found it confusing for some time 😥

In this method I override only the entrypoint (python3 /kadalu/start.py) specified in the docker image of kadalu-operator, as below:
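
As a rough aid, the rule from the kubernetes documentation can be written as a small function: command replaces the image ENTRYPOINT, args replaces the image CMD, and supplying command alone discards the image CMD. The function name effective_argv and its parameters are purely illustrative.

```python
def effective_argv(image_entrypoint, image_cmd, command=None, args=None):
    """Return the argv a container ends up running.

    `command` overrides the image ENTRYPOINT and `args` overrides the image
    CMD. When `command` is given without `args`, the image CMD is ignored.
    """
    if command is not None:
        return list(command) + list(args or [])
    if args is not None:
        return list(image_entrypoint) + list(args)
    return list(image_entrypoint) + list(image_cmd)
```

Setting command to ["python3", "-m", "pdb", "/kadalu/start.py"] therefore replaces whatever the image would have run, which is exactly what the manifests in this post rely on.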

-> git diff manifests/kadalu-operator.yaml

diff --git a/manifests/kadalu-operator.yaml b/manifests/kadalu-operator.yaml

index 985a297..9fc372c 100644

privileged: true

image: docker.io/kadalu/kadalu-operator:devel

imagePullPolicy: IfNotPresent

+ stdin: true

+ tty: true

+ command: ["python3", "-m", "pdb", "-c", "alias reg_sig tbreak /kadalu/kadalulib.py:322;; c;; signal.signal(signal.SIGUSR1, lambda *args:breakpoint());; c;;", "/kadalu/start.py"]

env:

- name: WATCH_NAMESPACE

valueFrom:

- name: K8S_DIST

value: "kubernetes"

- name: VERBOSE

- value: "no"

\ No newline at end of file

+ value: "yes"

Setting stdin and tty to true is mandatory to communicate with pdb; if they aren't set, a BdbQuit exception is raised as soon as you hit the (Pdb) prompt and your container goes into a failed state after multiple tries.

Here I'm not running any pdb commands, only registering an alias named reg_sig. If we deploy the above manifest, pdb waits for input, and we can add any breakpoints that we wish to retain if the init process gets restarted manually or automatically.

By looking at the source I know that by the time kadalulib.py is called the signal module has already been imported, so just for convenience I'm registering the signal handler at this specific line. After deploying the above manifest I'm just checking the alias and registering the signal handler.

Note:

'tbreak' sets a temporary breakpoint that is cleared after it's hit for the first time. The 'kubectl attach' operation is performed only once, until it times out; it's shown in the listings only to indicate which of the above mentioned panes is in use.

> /kadalu/start.py()

()

Now we can send a USR1 signal to reach the pdb prompt as below and look at the stack frames.

You'll always end up at the pdb prompt while the start process is sleeping. However, knowing that the start process monitors the status of its children, I can set a breakpoint accordingly and kill the children to figure out any discrepancies in launching the child process (if there are any).

-> exec / - - - --

@-67b7866747-:/# ps -ef | grep [s]tart.py

1 0 0 03:25 /0 00:00:00 - - //:322;; ;; ;; ;; //

@-67b7866747-:/# kill -USR1 1

@-67b7866747-:/#

-> / - - -

----

> <><>->None

///.8/

-> return >->None

> //

->

False

-> exec / - - - --

@-67b7866747-:/# ps -ef | grep [p]ython

1 0 0 03:25 /0 00:00:00 - - //:322;; ;; ;; ;; //

7 1 0 06:06 /0 00:00:01 //

@-67b7866747-:/#
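
The reason the prompt always lands in the sleeping code is that a python signal handler receives the frame that was running when the signal arrived. The sketch below records that stack instead of opening pdb so it can be checked non-interactively. sleepy_monitor is a hypothetical stand-in for the real monitor loop, and the other names are my own as well.

```python
import os
import signal
import threading
import time
import traceback


def record_stack_on_signal(seen):
    """Install a SIGUSR1 handler that appends the interrupted call stack to `seen`."""
    def _handler(signum, frame):
        # `frame` is whatever python code was running when the signal arrived.
        seen.append([entry.name for entry in traceback.extract_stack(frame)])

    return signal.signal(signal.SIGUSR1, _handler)


def sleepy_monitor(seconds):
    """Hypothetical stand-in for a monitor loop that spends its time sleeping."""
    deadline = time.monotonic() + seconds
    while time.monotonic() < deadline:
        time.sleep(0.05)
```

If a USR1 signal arrives while sleepy_monitor is running, the recorded stack contains sleepy_monitor, which is the frame you would walk up to with u at the pdb prompt.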

Using initContainers and postStart §

If we are lazy (at least I am at times 😅) and don't want to make pdb wait for input, we can use initContainers in conjunction with pdbrc to run commands on dropping into the pdb prompt. On a clean k3d cluster I deployed kadalu-operator.yaml with the modifications below:

-> git diff manifests/kadalu-operator.yaml

diff --git a/manifests/kadalu-operator.yaml b/manifests/kadalu-operator.yaml

index 985a297..8e93c82 100644

privileged: true

image: docker.io/kadalu/kadalu-operator:devel

imagePullPolicy: IfNotPresent

+ command: ["python3", "-m", "pdb", "/kadalu/start.py"]

+ stdin: true

+ tty: true

+ lifecycle:

+ postStart:

+ exec:

+ command:

+ - '/usr/bin/sh'

+ - '-c'

+ - 'while ! ps -ef | grep [m]ain.py; do sleep 1;

+ echo Waiting for subprocess to start;

+ done && umount /root/.pdbrc'

env:

- name: WATCH_NAMESPACE

valueFrom:

- name: K8S_DIST

value: "kubernetes"

- name: VERBOSE

- value: "no"

\ No newline at end of file

+ value: "yes"

+ volumeMounts:

+ - name: pdbrc

+ mountPath: "/root/.pdbrc"

+ subPath: ".pdbrc"

+ initContainers:

+ - name: pdbrc

+ image: busybox

+ command:

+ - 'sh'

+ - '-c'

+ - 'printf "%s\n%s\n%s\n%s" "tbreak /kadalu/kadalulib.py:322" "continue"

+ "signal.signal(signal.SIGUSR1, lambda *args: breakpoint())" "continue" > /pdbrc/.pdbrc'

+ volumeMounts:

+ - name: pdbrc

+ mountPath: "/pdbrc"

+ volumes:

+ - name: pdbrc

+ emptyDir: {}

After looking at the above, you might wonder why we should go through all this hassle rather than just importing signal and setting a breakpoint() in the command value itself.

However, there might be scenarios where you want to set an actual breakpoint using break/tbreak and ensure it's hit even after a restart. In that case you need a manifest similar to the one above, as setting break from -c isn't working (as expected?).

Let's see what's going on in above manifest:

- Using an initContainer in conjunction with emptyDir I'm creating a pdbrc file

- As the kadalu-operator container starts after the initContainer, I'm making the .pdbrc file available to it using subPath, and pdb reads this rc file
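
For reference, the file that the printf in the initContainer produces can be rendered from python as follows. This is only a sketch of the file's contents; render_pdbrc and write_pdbrc are hypothetical helper names and are not part of the manifest.

```python
from pathlib import Path


def render_pdbrc(break_at):
    """Return .pdbrc text: one pdb command per line, run in order at startup."""
    commands = [
        f"tbreak {break_at}",  # temporary breakpoint, cleared after the first hit
        "continue",            # run until that breakpoint is reached
        "signal.signal(signal.SIGUSR1, lambda *args: breakpoint())",
        "continue",            # resume so that pdb does not wait for input
    ]
    return "\n".join(commands)


def write_pdbrc(directory, break_at):
    """Write the rendered commands to <directory>/.pdbrc and return the path."""
    path = Path(directory) / ".pdbrc"
    path.write_text(render_pdbrc(break_at))
    return path
```

Calling render_pdbrc("/kadalu/kadalulib.py:322") yields the same four lines that the manifest writes to /pdbrc/.pdbrc.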

Before explaining why the lifecycle stanza is required, let me show you what happens if we omit it. I applied the manifest and sent a signal, just as in the previous method.

<Handlers.SIG_DFL: 0>

|

Because of the presence of .pdbrc, the pdb prompt didn't wait for user input (as the last command is continue) and ran all the commands (this can be seen in kubectl logs). However, here's a gotcha: whenever we send kill -USR1 1, the process drops into pdb, reads .pdbrc again and continues execution. To prevent that we need to perform the step below, and the lifecycle stanza serves exactly this need:

|

|

After deploying the manifest with postStart, whenever we send a USR1 signal we'll drop into pdb without any issues:

Debugging Kadalu Operator §

If you are wondering whether the above methods have any connection with the actual operator implementation, the answer is: only a slight one. start.py launches main.py, which performs the operator logic, namely deploying the config map, storage classes and csi pods, and watching the event stream for any operations on the kadalu storage CRD.

Until now we haven't touched the python source, only added a few steps to the deployment config. However, there's a limitation: we can't reach main.py from start.py due to the below style of launching the subprocess. Even if we change this code and use send_signal, it's tough to manipulate multiple child processes.

# sed -n '293,300p' lib/kadalulib.py

"""Start a Process"""

=

We'll use the above limitation to our advantage and set a signal handler in main.py itself (copied to the container with kubectl cp) to reach the pdb prompt, knowing that if we kill the child, the monitor simply restarts it and it automatically picks up the new file (similar to what was stated in the previous post).

I applied manifests/kadalu-operator.yaml without any changes and once all the below resources are created by kadalu-operator ...

; ;

... patch the deploy/operator resource to support stdin and tty using below manifest

-> bat /tmp/patch.yaml --plain

spec:

template:

spec:

containers:

- name: kadalu-operator

stdin: true

tty: true

-> kubectl patch deployments.apps operator --patch "$(cat /tmp/patch.yaml)"

Now everything is in place to trace the steps that the operator performs. I made the changes below and copied the source to the kadalu-operator container:

# git diff operator/main.py

diff --git a/operator/main.py b/operator/main.py

index 0a13e27..7d1509d 100644

# ignore these warnings as we know to make calls only inside of

# kubernetes cluster

urllib3.disable_warnings()

-

+ import signal

+ signal.signal(signal.SIGUSR1, lambda *args: breakpoint())

+ from time import sleep

+ sleep(10)

main()

We can delete the resources created by the operator and kill main.py so that it picks up the changed file; before execution goes past sleep(10) we need to send a USR1 signal, as below:

; ;

| | |

| | |

Finally we are at the pdb prompt with main.py execution stopped. Refer to the listing below and its inline comments to follow the steps performed by the operator.

-> / - - -

----

> //<>->None

->

//<>

->

> //<>->None

->

.

904 # This not advised in general, but in kadalu's operator, it is OK to

905 # ignore these warnings as we know to make calls only inside of

906 # kubernetes cluster

907

908

909 ->

910

911

912

# ----------------------------------------------------

# Setting temporary debug points at important methods

# just before deploying resources

# ----------------------------------------------------

1 //:806

2 //:773

3 //:841

4 //:745

# -----------------------------------------------------------------

# First, an uid is generated and kadalu-info configmap is deployed

# All yaml files are pre-made using Jinja2 templating and values

# are filled before applying those manifests

# -----------------------------------------------------------------

1 //:806

> //

-> =

Let's add some storage to kadalu and see, in brief, how it is handled. I'm using paths on the same node just as a demo. As you can see below, the above breakpoint is hit when storage is being handled, and we just need to watch the kubernetes event stream (using the python api), it's that simple 😄

-> bat ../storage-config-path-minimal.yaml --plain;

---

apiVersion: kadalu-operator.storage/v1alpha1

kind: KadaluStorage

metadata:

name: replica3

spec:

type: Replica3

storage:

- node: k3d-test-agent-0

path: /mnt/sdc

- node: k3d-test-agent-0

path: /mnt/sdd

- node: k3d-test-agent-0

path: /mnt/sde

-> kubectl apply -f ../storage-config-path-minimal.yaml

kadalustorage.kadalu-operator.storage/replica3 created

()

()

> /kadalu/main.py())

)))

()

()

The operator deploys one pod for every storage path specified above, each acting as a backend gluster brick, and updates the config map with the volume (replica3) info. Upon receiving a PVC request on this backend volume, a subdir corresponding to the PVC is created and served as storage for the PV, which connects us back to the previous post on the CSI Driver.

====

# Broken into new lines for readability

}

}

}

The operator watches the event stream for three operations, as shown below, and dispatches each to the method which handles that operation:

744 # sed -n '745,770p' operator/main.py

745

746 """

747 Watches the CRD to provision new PV Hosting Volumes

748 """

749 =

750 =

751 =

752

753

754

755

756

757 =

758 =

759 =

760

761 continue

762 =

763 =

764

765

766

767

768

769

770
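
The dispatch pattern itself can be sketched in a few lines. This is not the operator's code: I'm assuming the three operations are the standard kubernetes watch event types ADDED, MODIFIED and DELETED, the handler names and return values are hypothetical, and the events are plain dicts shaped like those a watch stream yields (a type plus the object).

```python
def handle_added(obj):
    """Hypothetical handler: provision storage for a new CRD object."""
    return f"provision {obj['metadata']['name']}"


def handle_modified(obj):
    """Hypothetical handler: reconcile a changed CRD object."""
    return f"update {obj['metadata']['name']}"


def handle_deleted(obj):
    """Hypothetical handler: clean up after a removed CRD object."""
    return f"cleanup {obj['metadata']['name']}"


HANDLERS = {
    "ADDED": handle_added,
    "MODIFIED": handle_modified,
    "DELETED": handle_deleted,
}


def dispatch(events):
    """Route each watch event to the handler registered for its type."""
    results = []
    for event in events:
        handler = HANDLERS.get(event["type"])
        if handler is None:
            continue  # ignore event types we do not handle
        results.append(handler(event["object"]))
    return results
```

Feeding it an ADDED event for the replica3 object from the earlier manifest would route to handle_added, which is the point where the breakpoint in the pdb session was hit.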

Finally, most if not all of the operations we have seen from pdb can easily be viewed in the logs; however, this will come in handy when you are debugging the operator or any python application in general.

We'll be covering how kadalu manages/uses glusterfs underneath and provides a streamlined process for consuming storage in kubernetes workloads in a later post.

Summary §

At times pdb can be hard to manage; however, if you pick one of the workflows that suits you and spend some time refining it, you'll learn a lot and effectively shorten the feedback loop between development and testing cycles. One tip: if you are dropped into pdb while the main process is sleeping, go up (u) the stack and step (s) into the process.

The concepts shared here can be used in any normal python program, and I felt it'd be helpful to apply them in real time to break down and understand a complex piece of software one step at a time.

I hope this helped cement some of the operations performed by a typical kubernetes operator and motivates you to contribute to open source projects.

Send an email for any comments. Kudos for making it to the end. Thanks!